In computing, you will often come across the terms “32-bit” and “64-bit”. But have you ever wondered what they actually mean? Understanding these concepts helps explain how they have shaped today’s computer systems, which serve a wide range of purposes.

What do "32-bit" and "64-bit" mean in the context of computer systems?

What are the main differences between 32-bit and 64-bit processors?

What is the significance of 64-bit systems in modern computing?

The Basics: What Do 32-bit and 64-bit Mean?

What exactly does it mean when a computer’s “brain”, its processor, is described as 32-bit or 64-bit? In computing, the bit is the most basic unit of information, and each bit holds either a 0 or a 1. When we describe a processor as 32-bit or 64-bit, we are referring to the size of the data units it can process at once, as well as how much memory it can address.

- 32-bit processors handle data in 32-bit chunks, meaning they work on thirty-two bits at a time.

- 64-bit processors handle data in 64-bit chunks, so they can process more data in a single operation and do so more efficiently.
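To make the difference in width concrete, here is a small Python sketch. Python’s own integers have no fixed size, so the code emulates a fixed-width register by masking results to 32 or 64 bits. The helper names `max_unsigned` and `add_fixed_width` are hypothetical, chosen only for this illustration, and the model is deliberately simplified: it ignores details of real processors such as vector units.

```python
# A simplified sketch: Python integers are arbitrary-precision, so we emulate
# a fixed-width register by masking every result to the chosen number of bits.

def max_unsigned(bits: int) -> int:
    """Largest value an unsigned integer of the given width can hold."""
    return (1 << bits) - 1


def add_fixed_width(a: int, b: int, bits: int) -> int:
    """Add two unsigned values and wrap around the way a register of this width would."""
    mask = (1 << bits) - 1
    return (a + b) & mask


for width in (32, 64):
    print(f"{width}-bit unsigned max: {max_unsigned(width):,}")

# Adding 1 to the largest 32-bit value wraps back around to 0 in a 32-bit register...
print(add_fixed_width(max_unsigned(32), 1, 32))
# ...while a 64-bit register still has plenty of headroom for the same sum.
print(add_fixed_width(max_unsigned(32), 1, 64))
```

The same addition that overflows a 32-bit value fits comfortably in 64 bits, which is one reason 64-bit processors can work with larger numbers in a single step.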

A Simple History: Leaving 32-Bit Architecture Behind

The move from 32-bit to 64-bit systems is one of the most significant shifts in computer technology.

32-Bit Processors:

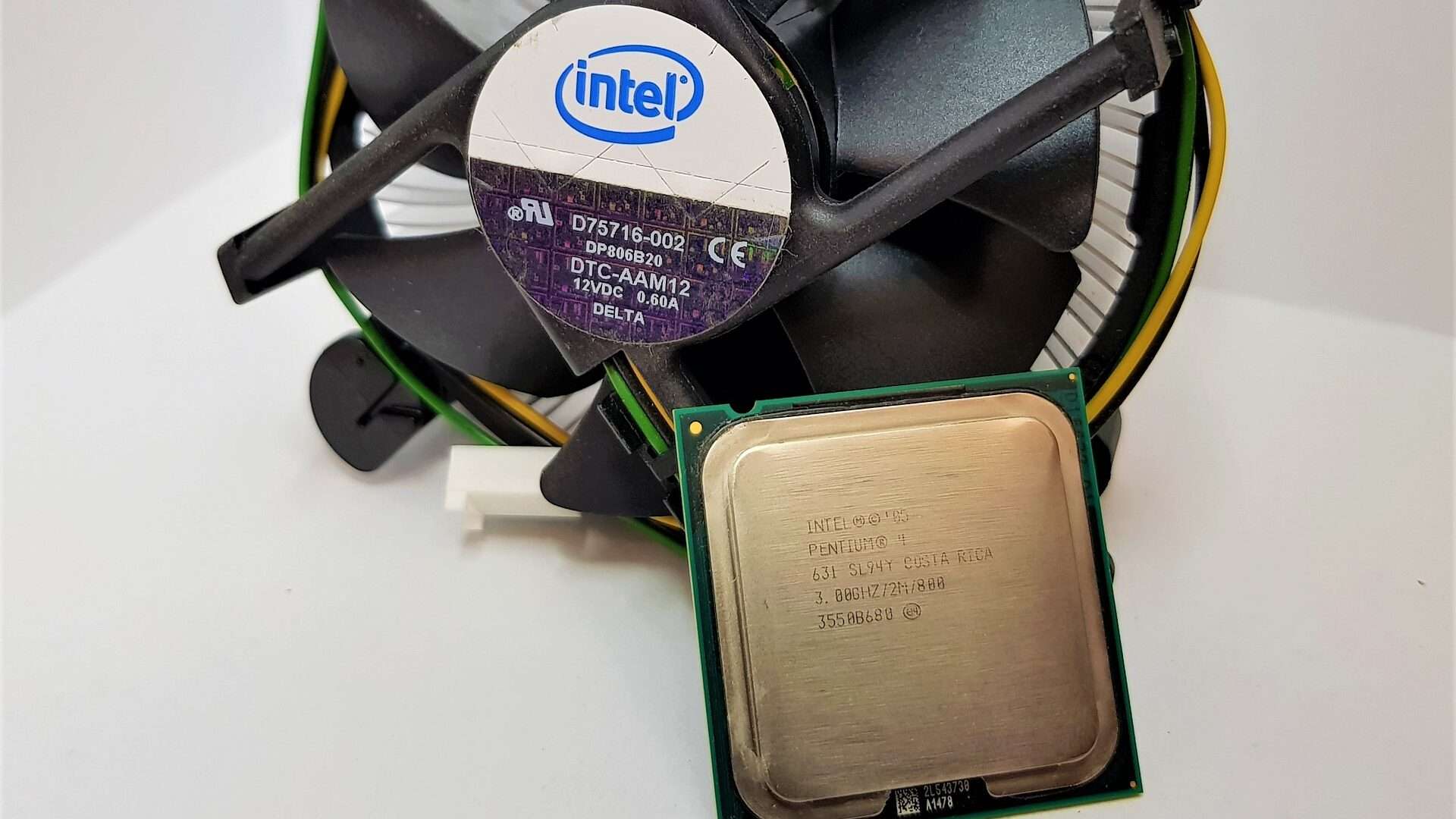

From the mid-1980s into the early 2000s, 32-bit architecture was the standard for personal computers. Iconic processors such as Intel’s 80386 and the later Pentium series were built on it and represented a huge step forward at the time. These processors could run increasingly complex software, manage multiple tasks at once, and provide the computational power needed for early graphical user interfaces.

Operating systems of this era, such as Windows 95, Windows 98, and early versions of Windows XP, were designed for 32-bit processors. Typical applications of the time did not demand more memory or processing power than these systems could provide.

The Transition to 64-bit Processors:

As software grew more complex and demanded more memory and processing power, the limitations of 32-bit systems became clear. This created demand for more efficient systems that could accommodate larger amounts of memory, and 64-bit processors arrived to meet it.

The first 64-bit processors for consumer computers arrived in the early 2000s, with AMD’s Athlon 64 leading the way in 2003. This marked the start of a new era in which 64-bit architectures began displacing 32-bit ones in both hardware and software.

Apple’s Mac OS X Snow Leopard, released in 2009, was a prominent example of an operating system that had moved largely to 64-bit, and it showed how firmly the industry was heading toward 64-bit as the standard computing platform. Mobile devices soon followed: the iPhone 5s, released in 2013, was the first smartphone to use a 64-bit chip.

The Significance of 32-bit and 64-bit in Modern Computing

The transition from 32-bit to 64-bit computing was not just a matter of incremental improvement; it represented a fundamental shift in how computers handle data and memory.

- 32-bit systems can address only 4 GB of memory (2^32 bytes), which by modern standards restricts them to less demanding tasks. They also offer fewer security features and struggle to handle the demands of today’s software.

- 64-bit systems, by contrast, can address far more memory, which lets them work with larger data sets and run more complex applications, and their wider registers let them process more data per instruction. They are also more secure, since 64-bit platforms typically include additional protections against malware and unauthorized system modifications.
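The memory limits follow directly from the address width: an n-bit address can name 2^n distinct bytes. The Python sketch below works through that arithmetic and then checks whether the interpreter running it is a 32-bit or 64-bit build. The function `addressable_bytes` is a hypothetical helper made up for this example; `struct.calcsize("P")` and `sys.maxsize` are standard-library features. Keep in mind that the 64-bit figure is a theoretical ceiling: current x86-64 processors implement fewer address bits (commonly 48 for virtual addresses), and operating systems impose their own limits on top of that.

```python
import struct
import sys


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses that an address of this width can name (hypothetical helper)."""
    return 2 ** address_bits


GIB = 2 ** 30  # 1 GiB = 1,073,741,824 bytes

print(f"32-bit: {addressable_bytes(32) // GIB} GiB")    # 32-bit: 4 GiB
print(f"64-bit: {addressable_bytes(64) // GIB:,} GiB")  # 64-bit: 17,179,869,184 GiB (16 EiB)

# Pointer size of the running Python interpreter, in bits ("P" is the C void * type).
pointer_bits = struct.calcsize("P") * 8
print(f"This interpreter uses {pointer_bits}-bit pointers")

# sys.maxsize is 2**31 - 1 on 32-bit builds and 2**63 - 1 on 64-bit builds.
print("64-bit build" if sys.maxsize > 2**32 else "32-bit build")
```

Note that this reports the bitness of the Python interpreter, not of the operating system: a 32-bit Python can still run on a 64-bit OS.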

Today, 64-bit systems are the norm, providing a robust platform for everything from gaming and professional content creation to scientific computing and big data analysis. If you are unsure which one to choose, read our other article on the topic.